Much of the literature on Neural Architecture Search (NAS) optimizes architectures for a single objective: the predictive performance of the model. Other considerations matter as well, such as reducing the model’s size for deployment on smaller, less powerful hardware. NAS itself also demands significant computational resources.

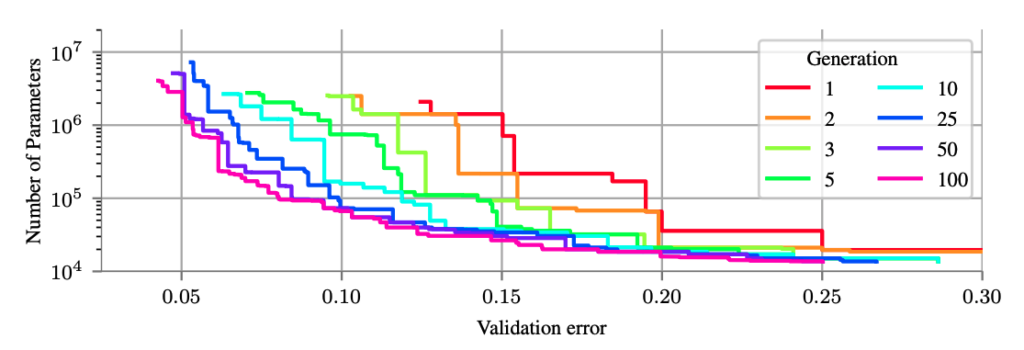

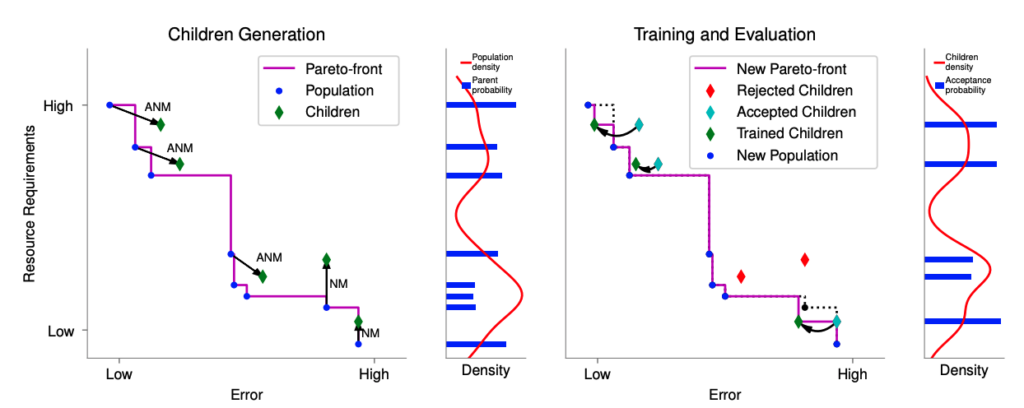

In our ICLR 2019 paper, “Efficient Multi-objective Neural Architecture Search via Lamarckian Evolution”, we address both challenges simultaneously. LEMONADE (Lamarckian Evolutionary algorithm for Multi-Objective Neural Architecture DEsign) is an evolutionary algorithm that uses network morphisms (NM) and approximate network morphisms (ANM) as mutation operators to generate new architectures, and maintains a Pareto front of models. Network morphisms preserve the function the model computes while increasing its size, whereas approximate network morphisms shrink the model while only approximately preserving its function. Because each child starts from (approximately) the function its parent computes, it does not need to be trained from scratch.
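To make the idea of a function-preserving mutation concrete, here is a minimal sketch of one well-known kind of network morphism: widening a hidden layer by duplicating a unit and splitting its outgoing weights between the two copies. It uses a tiny fully connected ReLU network written with plain Python lists. The helper names (`forward`, `widen`, `n_params`) are hypothetical and chosen for illustration; this is not the operator set or the code used in the paper.

```python
def forward(x, W1, b1, W2, b2):
    """One-hidden-layer ReLU network.

    W1 has one row per hidden unit, W2 has one row per output unit.
    """
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(W2, b2)]


def widen(W1, b1, W2, unit):
    """Duplicate hidden unit `unit` and split its outgoing weights in half.

    The two copies compute the same activation, and their halved outgoing
    weights add up to the original contribution, so the network output is
    unchanged (up to floating-point rounding) while the model grows.
    """
    W1_new = [row[:] for row in W1] + [W1[unit][:]]   # copy incoming weights
    b1_new = b1[:] + [b1[unit]]                       # copy bias
    W2_new = []
    for row in W2:
        half = row[unit] / 2.0
        new_row = row[:]
        new_row[unit] = half      # original copy keeps half the weight
        new_row.append(half)      # new copy gets the other half
        W2_new.append(new_row)
    return W1_new, b1_new, W2_new


def n_params(W1, b1, W2, b2):
    """Total number of weights and biases (a cheap size measure)."""
    return (sum(len(r) for r in W1) + len(b1)
            + sum(len(r) for r in W2) + len(b2))
```

The widened child can continue training from the parent's weights, which is the sense in which nothing has to be learned again from scratch. An approximate morphism would go the other way, for example by removing a unit, and could only approximate the parent's outputs.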

To guide the evolution process, we divide the objectives into “cheap” and “expensive” ones. Cheap objectives measure resource requirements, such as model size, while expensive objectives measure predictive performance, which requires training and evaluating the model. Our method uses the cheap evaluations to identify promising candidates for the expensive ones, resulting in substantial computational savings.
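The cheap-before-expensive idea can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's exact procedure: each model has two objectives to minimize, `(size, error)`; children are preferred when their size falls in a sparsely populated region of the current Pareto front, estimated here with a hand-rolled Gaussian kernel density; and only the selected children receive the expensive evaluation. All function and parameter names (`propose_child`, `cheap_obj`, `expensive_obj`, `bandwidth`, and so on) are hypothetical.

```python
import math
import random


def dominates(a, b):
    """True if a is no worse than b in every objective and better in one."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_front(models):
    """Keep the models whose objective vector no other model dominates."""
    return [m for m in models
            if not any(dominates(o["objs"], m["objs"])
                       for o in models if o is not m)]


def density(value, reference, bandwidth):
    """Unnormalized Gaussian kernel density of `value` among `reference`."""
    return sum(math.exp(-0.5 * ((value - r) / bandwidth) ** 2)
               for r in reference)


def select_for_training(child_sizes, front_sizes, k, bandwidth, rng):
    """Sample k child indices, favouring sizes that are rare on the front."""
    weights = [1.0 / (density(s, front_sizes, bandwidth) + 1e-9)
               for s in child_sizes]
    return rng.choices(range(len(child_sizes)), weights=weights, k=k)


def generation_step(front, propose_child, cheap_obj, expensive_obj,
                    n_children, n_trained, bandwidth, rng):
    """One generation: many cheap evaluations, few expensive ones."""
    # 1. Mutate randomly chosen parents from the current front.
    children = [propose_child(rng.choice(front)["arch"], rng)
                for _ in range(n_children)]
    # 2. Cheap objective for every child (no training involved).
    sizes = [cheap_obj(c) for c in children]
    # 3. Choose which children are worth the expensive evaluation.
    front_sizes = [m["objs"][0] for m in front]
    chosen = sorted(set(select_for_training(
        sizes, front_sizes, n_trained, bandwidth, rng)))
    # 4. Expensive objective only for the chosen children.
    evaluated = [{"arch": children[i],
                  "objs": (sizes[i], expensive_obj(children[i]))}
                 for i in chosen]
    # 5. Update the Pareto front with the newly evaluated models.
    return pareto_front(front + evaluated)
```

In this sketch at most `n_trained` of the `n_children` candidates per generation incur the expensive evaluation, so the savings grow with the ratio between the two numbers. Sampling inversely to density is one way to push the search toward under-explored trade-offs between size and accuracy.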