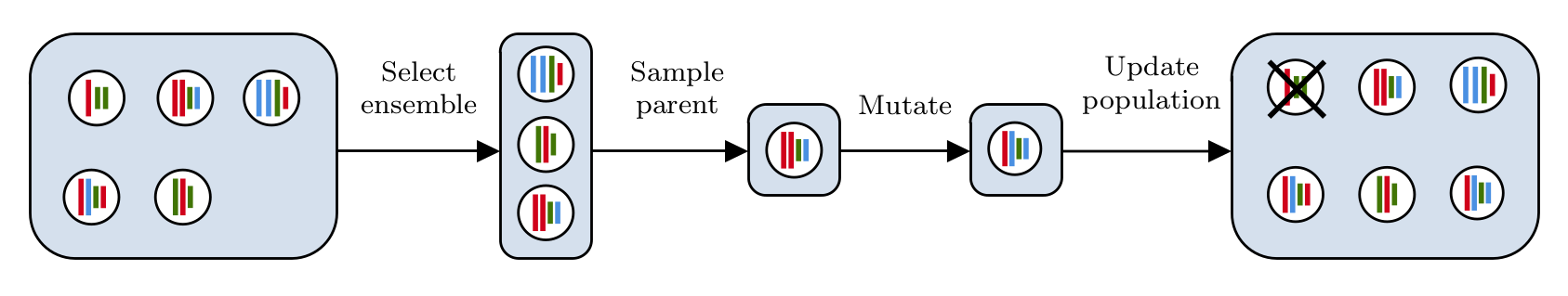

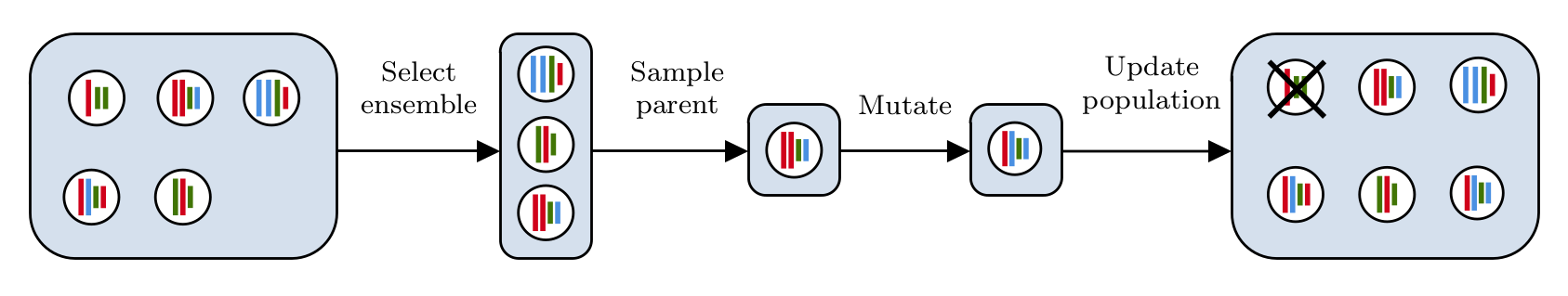

Neural Ensemble Search for Uncertainty Estimation and Dataset Shift

Posted on December 1, 2020

In many real-world scenarios, deep learning models such as neural networks are deployed to make predictions on data coming from a shifted distribution (aka covariate shift) or on out-of-distribution (OOD) data not represented in the training set at all. Examples include blurred or noisy images, unknown objects in images or videos, a new frequency band […]


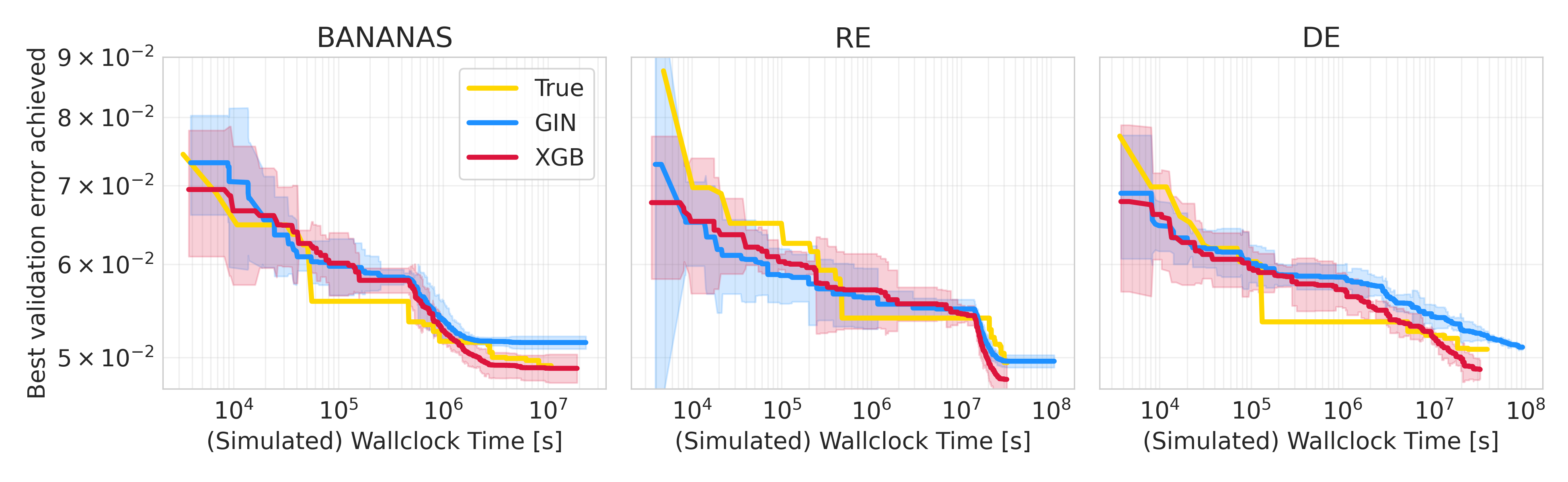

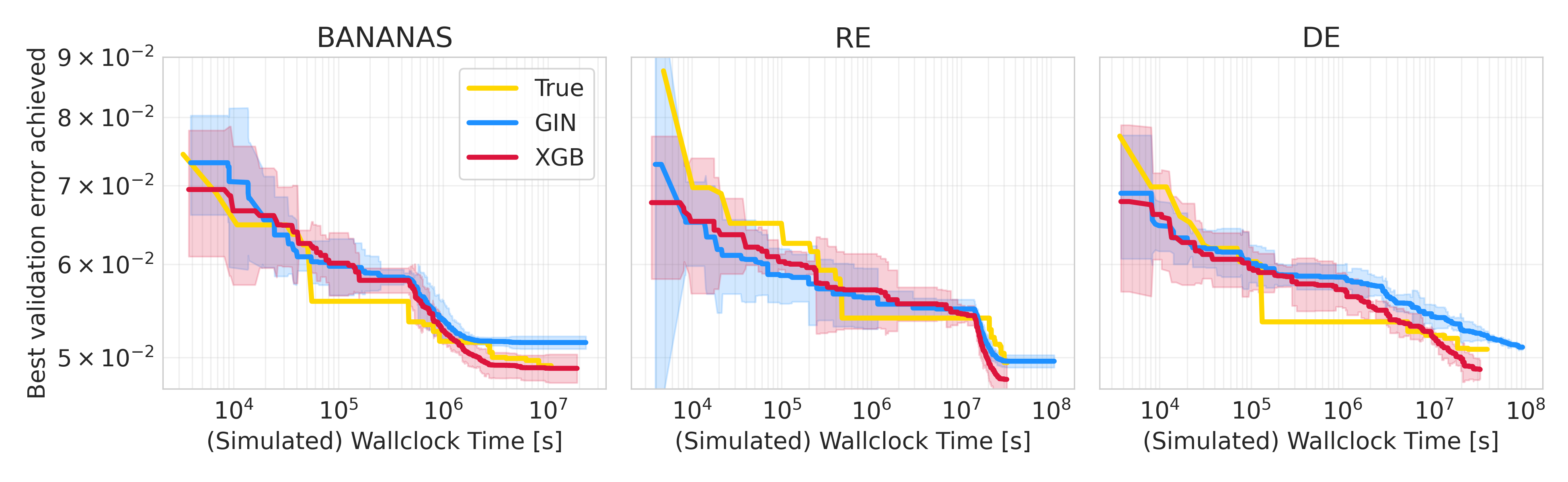

NAS-Bench-301 and the Case for Surrogate NAS Benchmarks

Posted on October 9, 2020

The Need for Realistic NAS Benchmarks: Neural Architecture Search (NAS) is a logical next step in representation learning as it removes human bias from architecture design, similar to deep learning removing human bias from feature engineering. As such, NAS has experienced rapid growth in recent years, leading to state-of-the-art performance on many tasks. However, empirical […]


RobustDARTS

Posted on October 21, 2019

Understanding and Robustifying Differentiable Architecture Search: The search for neural network architectures was initially defined as a discrete optimization problem, which intrinsically required training and evaluating thousands of networks. This of course required a huge amount of computational power, which was available to only a few institutions. One-shot neural architecture search (NAS) democratized this […]


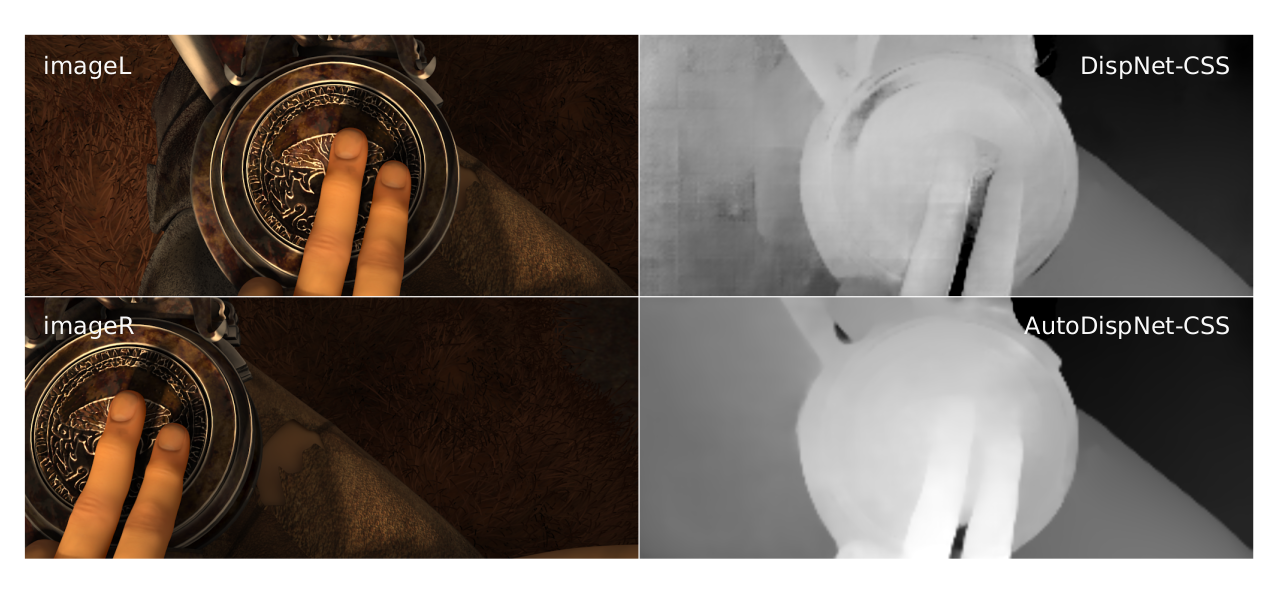

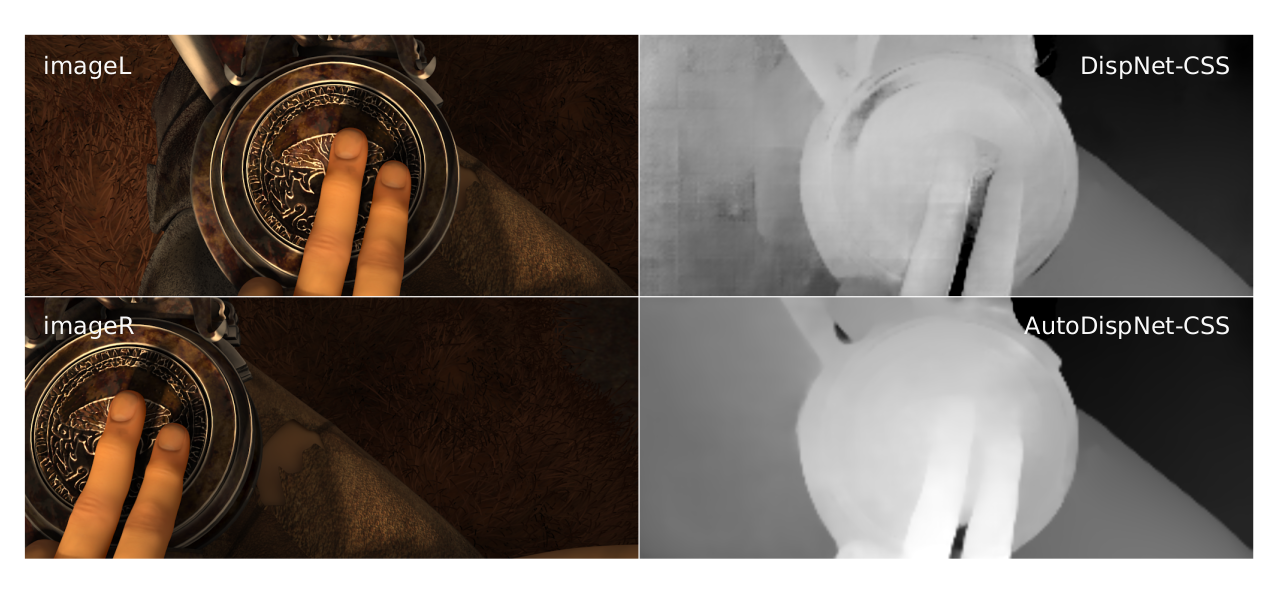

AutoDispNet: Improving Disparity Estimation with AutoML

Posted on August 30, 2019

Compared to the state of computer vision 20 years ago, deep learning has enabled more generic methodologies that can be applied to various tasks by automatically extracting meaningful features from the data. However, in practice those methodologies are not as generic as they look at first glance. While standard neural networks may lead to […]
